Ijraset Journal For Research in Applied Science and Engineering Technology

Automated Detection of Malaria-Infected Cells Using Convolutional Neural Networks

Authors: Sahil Khan, Ms. Swati Jadon, Ms. Richa Tiwari

DOI Link: https://doi.org/10.22214/ijraset.2024.63816

Abstract

In this study, we developed and evaluated a convolutional neural network (CNN) model to detect malaria-infected cells in microscopic images. The dataset comprised 27,558 images categorized as “Infected” or “Uninfected” cells. The model architecture included multiple convolutional, max-pooling, and dropout layers to extract features and reduce overfitting, and data augmentation was used to improve the model’s generalization. The CNN model achieved over 90% accuracy on both the training and validation datasets. The training and validation accuracy curves rose rapidly in the initial epochs and then plateaued, indicating stable convergence. Both training and validation loss decreased substantially, with the validation loss settling at a level comparable to, and at times below, the training loss, a pattern consistent with regularization that is active only during training. This model offers a promising approach for the accurate and efficient detection of malaria-infected cells, which can aid timely diagnosis and treatment and ultimately improve patient outcomes.

I. INTRODUCTION

Deep learning is a subfield of machine learning, and therefore of artificial intelligence (AI), that focuses on training artificial neural networks with multiple layers (hence “deep”) to learn complex patterns and representations from data [1-5]. These networks are loosely inspired by the structure and function of the human brain, with interconnected nodes (neurons) that process and transmit information. Deep learning algorithms excel at tasks such as image recognition, natural language processing, and decision-making, in some cases achieving human-level or even superhuman performance [6-7]. They learn by adjusting the connection weights between neurons based on feedback from data, which allows them to discover intricate relationships and features that may not be obvious to humans. This ability to learn directly from raw data, without hand-crafted rules, has made deep learning a driving force behind many recent advances in AI. In manufacturing, deep learning is used to optimize production processes, predict equipment failures, and enhance quality control [8-13], leading to increased efficiency, reduced downtime, and improved product quality. In healthcare, deep learning algorithms analyze medical images with high accuracy, aiding early disease detection and personalized treatment planning; deep learning also accelerates drug discovery and improves patient outcomes through predictive analytics. In finance, it powers fraud detection systems, automates risk assessment, and enables algorithmic trading. It is also changing customer service through intelligent chatbots and personalized recommendations. These examples illustrate how deep learning is reshaping industries and driving innovation across diverse sectors.

Convolutional Neural Networks (CNNs) are a specialized type of deep learning architecture primarily designed for image processing and analysis. They are the backbone of many computer vision applications, including image classification, object detection, and facial recognition. CNNs are considered deep learning models due to their use of multiple layers to progressively extract higher-level features from raw image data. The core building block of a CNN is the convolutional layer, which applies filters (or kernels) to the input image to detect specific patterns like edges, corners, and textures. By stacking multiple convolutional layers, CNNs can learn to recognize increasingly complex features, from simple shapes to entire objects. These learned features are then used by subsequent layers to classify images or perform other tasks. In addition to convolutional layers, CNNs often incorporate pooling layers to reduce the dimensionality of feature maps and improve computational efficiency. Fully connected layers at the end of the network aggregate information from the preceding layers and produce the final output, such as class probabilities or bounding box coordinates. The deep architecture of CNNs, along with their ability to automatically learn relevant features from data, makes them powerful tools for image analysis tasks. They have achieved state-of-the-art performance in various applications, leading to significant advancements in fields like medical imaging, autonomous vehicles, and robotics.
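To make the convolution and pooling operations described above concrete, the following NumPy sketch slides a 3×3 vertical-edge kernel over a toy 6×6 image and then applies 2×2 max pooling. The Sobel-style kernel is hand-set purely for illustration; in a CNN such as the one in this study the kernel weights are learned. Like Keras's `Conv2D`, the sketch computes a cross-correlation with stride 1 and no padding.

```python
import numpy as np


def conv2d_valid(image: np.ndarray, kernel: np.ndarray) -> np.ndarray:
    """Slide `kernel` over `image` (stride 1, no padding, kernel not flipped)."""
    kh, kw = kernel.shape
    out_h = image.shape[0] - kh + 1
    out_w = image.shape[1] - kw + 1
    out = np.zeros((out_h, out_w), dtype=float)
    for i in range(out_h):
        for j in range(out_w):
            # Weighted sum of the image patch currently under the kernel
            out[i, j] = np.sum(image[i:i + kh, j:j + kw] * kernel)
    return out


def max_pool2d(fmap: np.ndarray, size: int = 2) -> np.ndarray:
    """Non-overlapping max pooling; leftover rows/cols that do not fill a window are dropped."""
    h, w = fmap.shape[0] // size, fmap.shape[1] // size
    trimmed = fmap[:h * size, :w * size]
    return trimmed.reshape(h, size, w, size).max(axis=(1, 3))


# Toy 6x6 "image": dark left half, bright right half (one vertical edge)
image = np.zeros((6, 6))
image[:, 3:] = 1.0

# Sobel-style kernel that responds to left-to-right intensity increases
kernel = np.array([[-1, 0, 1],
                   [-2, 0, 2],
                   [-1, 0, 1]], dtype=float)

fmap = conv2d_valid(image, kernel)  # shape (4, 4)
pooled = max_pool2d(fmap, size=2)   # shape (2, 2)
print(fmap)
print(pooled)
```

The feature map is non-zero only where the 3×3 window straddles the dark-to-bright boundary, and pooling keeps the strongest response in each 2×2 window while halving both spatial dimensions.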

Soni et al. [14] proposed the SEMRCNN model, a deep learning approach for the automatic segmentation of prostate cancer lesions in multiparametric magnetic resonance imaging (MP-MRI). The model uses two parallel convolutional networks to extract features from apparent diffusion coefficient (ADC) and T2-weighted (T2W) images and fuses this complementary information to improve segmentation accuracy. Squeeze-and-excitation blocks reweight the channels of the fused feature map to emphasize the most relevant features, which improves lesion identification and segmentation. Evaluated on 140 cases, SEMRCNN outperformed V-Net, ResNet50-U-Net, Mask R-CNN, and U-Net, achieving a Dice coefficient of 0.654, a sensitivity of 0.695, a specificity of 0.970, and a positive predictive value of 0.685, which highlights its potential for accurate and efficient prostate cancer detection in MP-MRI.

More et al. [15] introduced a denoising technique for medical images based on a sparse-aware convolutional neural network (SA-CNN). The approach targets poor contrast and noise, common problems in medical imaging that can hinder diagnosis and treatment decisions. The SA-CNN model uses patch creation and dictionary methods to extract information from various medical imaging modalities, and the framework is evaluated with peak signal-to-noise ratio (PSNR), structural similarity index (SSIM), and mean squared error (MSE). The study also optimizes computational time to improve efficiency and the visual quality of the medical images. Additionally, the authors integrate the Internet of Healthcare Things (IoHT), securing communication between IoT devices and servers through vault and challenge schemes.

Anand et al. [16] proposed CNN-DMA, a deep learning model for detecting malware attacks on e-health applications in the 5G-IoT landscape. The model uses a convolutional neural network classifier with Dense, Dropout, and Flatten layers and achieves 99% accuracy on the Malimg dataset. This approach addresses the critical need for stronger security in e-health applications, where sensitive patient data is vulnerable to attack.
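The evaluation metrics cited in these studies follow standard definitions. The sketch below computes the segmentation metrics reported for SEMRCNN (Dice coefficient, sensitivity, specificity, and positive predictive value) from binary masks, plus MSE and PSNR for image reconstruction. The masks and images are toy values invented for illustration and are unrelated to the data in [14] or [15]. SSIM is omitted because it needs a windowed computation; scikit-image provides one as `skimage.metrics.structural_similarity`.

```python
import numpy as np


def confusion_counts(pred, truth):
    """Pixel-wise TP, FP, FN, TN for two binary masks of the same shape."""
    pred = np.asarray(pred, dtype=bool)
    truth = np.asarray(truth, dtype=bool)
    tp = int(np.sum(pred & truth))
    fp = int(np.sum(pred & ~truth))
    fn = int(np.sum(~pred & truth))
    tn = int(np.sum(~pred & ~truth))
    return tp, fp, fn, tn


def segmentation_metrics(pred, truth):
    """Dice, sensitivity, specificity and PPV (undefined if a denominator is 0)."""
    tp, fp, fn, tn = confusion_counts(pred, truth)
    return {
        "dice": 2 * tp / (2 * tp + fp + fn),
        "sensitivity": tp / (tp + fn),  # true-positive rate (recall)
        "specificity": tn / (tn + fp),  # true-negative rate
        "ppv": tp / (tp + fp),          # positive predictive value (precision)
    }


def mse(reference, estimate):
    """Mean squared error between two images."""
    diff = np.asarray(reference, dtype=float) - np.asarray(estimate, dtype=float)
    return float(np.mean(diff ** 2))


def psnr(reference, estimate, max_value=1.0):
    """Peak signal-to-noise ratio in dB for intensities in [0, max_value]."""
    return float(10.0 * np.log10(max_value ** 2 / mse(reference, estimate)))


# Toy 4x4 masks: 4 lesion pixels, one missed (FN) and one spurious (FP) prediction
truth = np.zeros((4, 4), dtype=bool)
truth[:2, :2] = True
pred = truth.copy()
pred[1, 1] = False
pred[3, 3] = True
scores = segmentation_metrics(pred, truth)
print({name: round(value, 3) for name, value in scores.items()})
# {'dice': 0.75, 'sensitivity': 0.75, 'specificity': 0.917, 'ppv': 0.75}

# Toy reconstruction check: a constant error of 0.1 gives MSE 0.01 and PSNR 20 dB
clean = np.full((4, 4), 0.5)
restored = clean + 0.1
print(f"MSE = {mse(clean, restored):.4f}, PSNR = {psnr(clean, restored):.2f} dB")
# MSE = 0.0100, PSNR = 20.00 dB
```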

II. PROBLEM STATEMENT

Malaria remains a significant public health challenge, particularly in tropical and subtropical regions. Traditional diagnostic methods, such as microscopic examination of blood smears, are time-consuming and require skilled technicians, which can lead to delays in diagnosis and treatment. The need for an efficient, accurate, and automated diagnostic tool is critical to improve patient outcomes and reduce the burden on healthcare systems. This study aims to develop a convolutional neural network (CNN) model capable of accurately identifying malaria-infected cells from microscopic images. By using deep learning techniques, this research seeks to enhance the speed and precision of malaria diagnosis, thereby facilitating timely medical intervention and contributing to the overall fight against malaria.

III. EXPERIMENTAL PROCEDURE

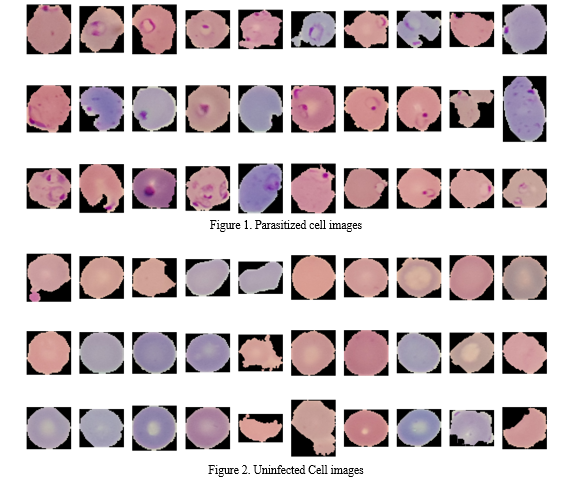

The dataset used for detecting malaria-infected cells consists of 27,558 images divided into two folders, “Parasitized” (infected) and “Uninfected”; sample images are shown in Figure 1 and Figure 2. The task is to develop a model that predicts whether an image belongs to the infected or the uninfected category. First, the required libraries for file operations, numerical analysis, data processing, visualization, and model building were imported, including NumPy, Pandas, Matplotlib, Seaborn, TensorFlow, and Scikit-learn. The data was examined by listing the files in each category and counting the number of images in the “Parasitized” and “Uninfected” directories. The images were then preprocessed and split into training and testing sets using the train_test_split function from Scikit-learn. A convolutional neural network (CNN) was built with TensorFlow’s Keras API, using Conv2D layers for convolution, MaxPooling2D layers for down-sampling, a Flatten layer to convert the feature maps into a vector, Dense layers as fully connected layers, and Dropout layers for regularization against overfitting. After the model parameters were defined and the model was initialized, data augmentation was performed with the ImageDataGenerator class, which applies random transformations to the training images to improve generalization. The EarlyStopping and ModelCheckpoint callbacks were used to monitor training and to save the best model according to validation performance. The model was trained with the fit method, with training and validation data generators feeding it in batches, and accuracy and loss were monitored throughout. After training, the model’s performance was evaluated on the test set using a confusion matrix and a classification report.
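A minimal sketch of the data-preparation and training setup described above follows. It assumes the folder layout named in the text (“Parasitized” and “Uninfected” subfolders of PNG files), a pandas DataFrame of file paths fed to `ImageDataGenerator.flow_from_dataframe`, and hypothetical values for the image size, batch size, split ratio, augmentation settings, and callback patience. The study does not report these values, so they should not be read as the authors' configuration. Note also that `ImageDataGenerator` is deprecated in recent TensorFlow releases in favour of `tf.data` pipelines and preprocessing layers.

```python
import os

import pandas as pd
import tensorflow as tf
from sklearn.model_selection import train_test_split

# Assumed folder names, taken from the dataset description; adjust to the local copy.
CLASS_DIRS = ("Parasitized", "Uninfected")
IMG_SIZE = (64, 64)  # hypothetical input resolution
BATCH_SIZE = 32      # hypothetical batch size


def build_file_table(root):
    """List every PNG under root/<class>/ into a DataFrame of (filename, label)."""
    rows = []
    for label in CLASS_DIRS:
        folder = os.path.join(os.path.abspath(root), label)
        for name in sorted(os.listdir(folder)):
            if name.lower().endswith(".png"):
                rows.append({"filename": os.path.join(folder, name), "label": label})
    return pd.DataFrame(rows)


def split_table(df, test_size=0.2, seed=42):
    """Stratified split so both subsets keep the same class ratio."""
    return train_test_split(df, test_size=test_size, stratify=df["label"], random_state=seed)


def make_generators(train_df, val_df):
    """Augment only the training images; validation images are just rescaled."""
    ImageDataGenerator = tf.keras.preprocessing.image.ImageDataGenerator
    train_aug = ImageDataGenerator(
        rescale=1.0 / 255,
        rotation_range=20,  # hypothetical augmentation settings
        zoom_range=0.1,
        horizontal_flip=True,
        vertical_flip=True,
    )
    val_aug = ImageDataGenerator(rescale=1.0 / 255)
    # class_mode="binary" maps labels alphanumerically: Parasitized -> 0, Uninfected -> 1
    common = dict(x_col="filename", y_col="label", target_size=IMG_SIZE,
                  class_mode="binary", batch_size=BATCH_SIZE)
    train_gen = train_aug.flow_from_dataframe(train_df, shuffle=True, **common)
    val_gen = val_aug.flow_from_dataframe(val_df, shuffle=False, **common)
    return train_gen, val_gen


def make_callbacks(checkpoint_path="best_model.keras"):
    """Stop when validation loss stalls and keep the best checkpoint on disk."""
    return [
        tf.keras.callbacks.EarlyStopping(monitor="val_loss", patience=3,
                                         restore_best_weights=True),
        tf.keras.callbacks.ModelCheckpoint(checkpoint_path, monitor="val_loss",
                                           save_best_only=True),
    ]


# Typical wiring (dataset path and epoch count are hypothetical; `model` is any
# compiled Keras binary classifier with a single sigmoid output):
# df = build_file_table("cell_images")
# train_df, val_df = split_table(df)
# train_gen, val_gen = make_generators(train_df, val_df)
# history = model.fit(train_gen, validation_data=val_gen, epochs=20,
#                     callbacks=make_callbacks())
```

Augmenting only the training generator keeps the validation images identical across epochs, so changes in validation metrics reflect the model rather than the random transformations.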
Finally, the training and validation metrics were plotted to visualize the model’s learning curves, showing how performance improved over the epochs and revealing any signs of overfitting or underfitting. Together, these steps were intended to produce a robust model capable of accurately detecting malaria-infected cells from microscopic images.
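The evaluation and plotting steps can be sketched as follows, assuming a single sigmoid output thresholded at 0.5 and the alphanumeric label mapping (Parasitized = 0, Uninfected = 1) that Keras's `flow_from_dataframe` produces when classes are not given explicitly. The labels, probabilities, and history values used below are made-up toy numbers, not results from this study.

```python
import matplotlib

matplotlib.use("Agg")  # render to files; no display needed
import matplotlib.pyplot as plt
import numpy as np
from sklearn.metrics import classification_report, confusion_matrix

CLASS_NAMES = ("Parasitized", "Uninfected")  # indices 0 and 1 (assumed mapping)


def evaluate_binary(y_true, probs, threshold=0.5):
    """Threshold sigmoid outputs, then build a confusion matrix and a text report."""
    y_pred = (np.asarray(probs).ravel() >= threshold).astype(int)
    cm = confusion_matrix(y_true, y_pred, labels=[0, 1])  # rows: true, cols: predicted
    report = classification_report(y_true, y_pred, labels=[0, 1],
                                   target_names=list(CLASS_NAMES), digits=3)
    return cm, report


def plot_learning_curves(history, out_path):
    """Save side-by-side accuracy and loss curves from a Keras History.history dict."""
    epochs = range(1, len(history["loss"]) + 1)
    fig, (ax_acc, ax_loss) = plt.subplots(1, 2, figsize=(10, 4))
    for ax, key, title in ((ax_acc, "accuracy", "Accuracy"), (ax_loss, "loss", "Loss")):
        ax.plot(epochs, history[key], label="Training")
        ax.plot(epochs, history["val_" + key], label="Validation")
        ax.set_title(title)
        ax.set_xlabel("Epoch")
        ax.legend()
    fig.tight_layout()
    fig.savefig(out_path)
    plt.close(fig)
    return out_path


# Toy example; with a trained model one would instead use, e.g.,
# probs = model.predict(val_gen) and y_true = val_gen.classes (val_gen unshuffled).
y_true = np.array([0, 0, 0, 1, 1, 1])
probs = np.array([0.1, 0.4, 0.7, 0.8, 0.6, 0.3])
cm, report = evaluate_binary(y_true, probs)
print(cm)  # [[2 1]
           #  [1 2]]
print(report)
```

Keeping the evaluation generator unshuffled matters: otherwise the order of `model.predict` outputs would not match the stored labels, and the confusion matrix would be meaningless.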

IV. RESULTS AND DISCUSSION

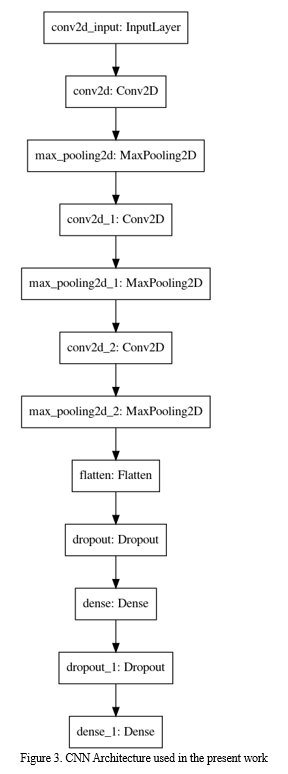

Figure 3 shows the architecture of the convolutional neural network (CNN) used to classify the cell images. The model starts with an InputLayer (conv2d_input) that feeds the images to the first 2D convolutional layer. The network then applies a sequence of convolutional and pooling layers that extract spatial features and progressively reduce their resolution. The first convolutional layer (conv2d) applies a set of learned filters to the input image to produce feature maps. It is followed by a max-pooling layer (max_pooling2d), which reduces the spatial dimensions of the feature maps by taking the maximum value within each window, retaining the strongest responses while reducing computational cost. A second convolutional layer (conv2d_1) and max-pooling layer (max_pooling2d_1) further refine the features, and a third pair (conv2d_2 and max_pooling2d_2) extracts and condenses them again. A Flatten layer then converts the resulting 2D feature maps into a 1D vector that serves as input to the fully connected (dense) layers, which perform the classification. Before the first dense layer, a dropout layer (dropout) randomly sets a fraction of its input units to zero during training, which reduces overfitting and improves generalization. The first dense layer (dense) maps the flattened vector to a learned feature representation, a second dropout layer (dropout_1) provides additional regularization, and the final dense layer (dense_1) produces the model’s predictions.
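The layer sequence in Figure 3 can be reproduced with a Keras `Sequential` model, sketched below. The figure does not show filter counts, kernel sizes, dense width, dropout rates, input size, optimizer, or output activation, so the values used here (32, 64, and 128 filters of size 3×3, 2×2 pooling, a 128-unit dense layer, dropout 0.5, 64×64 RGB inputs, Adam, and a single sigmoid unit with binary cross-entropy) are assumptions for illustration only.

```python
import tensorflow as tf
from tensorflow.keras import layers


def build_malaria_cnn(input_shape=(64, 64, 3), dropout_rate=0.5):
    """Conv/pool x3 -> Flatten -> Dropout -> Dense -> Dropout -> Dense (assumed sizes)."""
    model = tf.keras.Sequential([
        tf.keras.Input(shape=input_shape),
        layers.Conv2D(32, (3, 3), activation="relu"),   # conv2d
        layers.MaxPooling2D((2, 2)),                    # max_pooling2d
        layers.Conv2D(64, (3, 3), activation="relu"),   # conv2d_1
        layers.MaxPooling2D((2, 2)),                    # max_pooling2d_1
        layers.Conv2D(128, (3, 3), activation="relu"),  # conv2d_2
        layers.MaxPooling2D((2, 2)),                    # max_pooling2d_2
        layers.Flatten(),
        layers.Dropout(dropout_rate),                   # dropout
        layers.Dense(128, activation="relu"),           # dense
        layers.Dropout(dropout_rate),                   # dropout_1
        layers.Dense(1, activation="sigmoid"),          # dense_1: P(class index 1)
    ])
    model.compile(optimizer="adam", loss="binary_crossentropy", metrics=["accuracy"])
    return model


model = build_malaria_cnn()
model.summary()
```

With these assumed settings, the three conv/pool stages reduce a 64×64 input to 6×6×128 feature maps (4,608 values after flattening), and the model has 683,329 trainable parameters, most of them in the first dense layer. The layer names in the comments are the defaults Keras assigns in a fresh session, matching those in Figure 3.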

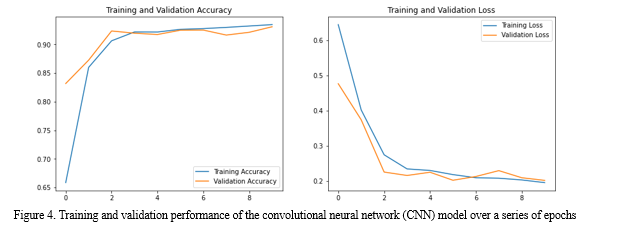

The plots in Figure 4 show the training and validation performance of the CNN model over the training epochs: the left plot shows training and validation accuracy, and the right plot shows training and validation loss. In the accuracy plot, both training and validation accuracy rise steadily during the initial epochs, indicating that the model is effectively learning to classify the images. Training accuracy starts at around 0.65 and rises above 0.90 within the first few epochs. Validation accuracy follows a similar trend, starting slightly above the training accuracy and exceeding 0.90, which indicates that the model generalizes well to unseen data. After this rapid initial increase, both curves plateau, suggesting that the model is approaching its maximum performance. Validation accuracy fluctuates slightly in later epochs, but the model maintains a high level of accuracy overall. In the loss plot, both training and validation loss decrease sharply during the first few epochs as the model learns weights that minimize the error. Training loss starts at around 0.6 and stabilizes around 0.2. Validation loss follows a similar pattern, starting higher than the training loss but converging to a comparable value. Interestingly, the validation loss dips below the training loss in the initial epochs. This is commonly observed when regularization is active only during training: when the training loss is computed, dropout disables a random subset of units and data augmentation perturbs the images, whereas the validation loss is computed on unaltered images with dropout turned off. In addition, Keras reports the training loss as an average over the batches of each epoch, while the validation loss is computed at the end of the epoch, after the weights have been updated. The validation loss curve shows some fluctuations but remains relatively stable, mirroring the validation accuracy trend.
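The explanation above rests on dropout behaving differently in training and inference. The short TensorFlow sketch below illustrates this with a standalone `Dropout` layer (rate 0.5, chosen for illustration): with `training=True` roughly half the units are zeroed and the survivors are scaled by 1/(1 − rate) = 2, whereas with `training=False`, the mode Keras uses when computing validation loss, the layer passes its input through unchanged.

```python
import numpy as np
import tensorflow as tf

tf.keras.utils.set_random_seed(0)  # make the random dropout mask reproducible

dropout = tf.keras.layers.Dropout(rate=0.5)
x = np.ones((1, 10_000), dtype="float32")

train_out = dropout(x, training=True).numpy()   # mode used for training batches
infer_out = dropout(x, training=False).numpy()  # mode used for validation/prediction

kept_fraction = float(np.mean(train_out != 0))
print(f"fraction of units kept in training: {kept_fraction:.3f}")     # close to 0.5
print(f"value of a kept unit: {train_out.max():.1f}")                 # 2.0
print(f"inference output unchanged: {np.array_equal(infer_out, x)}")  # True
```

Because the training-mode network is noisier, its loss on the same data is typically higher, which is one reason a validation loss curve can sit below the training loss curve.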

Conclusion

In this study, we developed a convolutional neural network (CNN) model to detect malaria-infected cells in microscopic images. The model achieved training and validation accuracies exceeding 90%, demonstrating its potential as an effective tool for malaria diagnosis. The data augmentation and regularization techniques contributed to the model’s generalization and helped prevent overfitting. The results suggest that the CNN model can aid the timely and accurate diagnosis of malaria, potentially reducing reliance on traditional, labor-intensive diagnostic methods. This automated approach can support quicker decision-making in clinical settings, leading to improved patient outcomes and more efficient healthcare delivery. The future scope of this research is extensive. First, incorporating more advanced neural network architectures and tuning hyperparameters could further improve performance and efficiency. Second, expanding the dataset with a larger and more diverse set of images from different geographical regions and stages of malaria infection would improve the model’s robustness and generalizability. Third, real-world deployment in clinical settings is a critical next step, as it would provide practical insights and reveal areas for improvement. Furthermore, lightweight versions of the model for mobile devices and edge computing platforms could greatly improve accessibility, particularly in remote and resource-limited areas. Finally, the techniques and insights from this study can be extended to detecting and diagnosing other diseases from medical images, broadening the impact of deep learning in healthcare.
By pursuing these directions, we can significantly advance automated malaria detection systems and contribute to the overall improvement of global health outcomes through the application of artificial intelligence.

References

[1] LeCun, Y., Bengio, Y. and Hinton, G., 2015. Deep learning. Nature, 521(7553), pp.436-444.

[2] Tropsha, A., Isayev, O., Varnek, A., Schneider, G. and Cherkasov, A., 2024. Integrating QSAR modelling and deep learning in drug discovery: the emergence of deep QSAR. Nature Reviews Drug Discovery, 23(2), pp.141-155.

[3] Dai, L., Sheng, B., Chen, T., Wu, Q., Liu, R., Cai, C., Wu, L., Yang, D., Hamzah, H., Liu, Y. and Wang, X., 2024. A deep learning system for predicting time to progression of diabetic retinopathy. Nature Medicine, 30(2), pp.584-594.

[4] Herrmann, L. and Kollmannsberger, S., 2024. Deep learning in computational mechanics: a review. Computational Mechanics, pp.1-51.

[5] Kalli, V.D.R., 2024. Advancements in deep learning for minimally invasive surgery: A journey through surgical system evolution. Journal of Artificial Intelligence General Science (JAIGS), ISSN: 3006-4023, 4(1), pp.111-120.

[6] Aguirre, F., Sebastian, A., Le Gallo, M., Song, W., Wang, T., Yang, J.J., Lu, W., Chang, M.F., Ielmini, D., Yang, Y. and Mehonic, A., 2024. Hardware implementation of memristor-based artificial neural networks. Nature Communications, 15(1), p.1974.

[7] Kurucan, M., Özbaltan, M., Yetgin, Z. and Alkaya, A., 2024. Applications of artificial neural network based battery management systems: A literature review. Renewable and Sustainable Energy Reviews, 192, p.114262.

[8] Mishra, A., Jatti, V.S., Sefene, E.M. and Paliwal, S., 2023. Explainable Artificial intelligence (XAI) and supervised machine learning-based algorithms for prediction of surface roughness of additively manufactured polylactic acid (PLA) specimens. Applied Mechanics, 4(2), pp.668-698.

[9] Mishra, A., Jatti, V.S. and Paliwal, S. eds., 2024. Sustainable Materials: The Role of Artificial Intelligence and Machine Learning. CRC Press.

[10] May, M.C., Neidhöfer, J., Körner, T., Schäfer, L. and Lanza, G., 2022. Applying natural language processing in manufacturing. Procedia CIRP, 115, pp.184-189.

[11] Jia, Y., She, L., Cheng, Y., Bao, J., Chai, J.Y. and Xi, N., 2016, August. Program robots manufacturing tasks by natural language instructions. In 2016 IEEE International Conference on Automation Science and Engineering (CASE) (pp. 633-638). IEEE.

[12] Costa, A.P., Seabra, M.R., de Sá, J.M.C. and Santos, A.D., 2024. Manufacturing process encoding through natural language processing for prediction of material properties. Computational Materials Science, 237, p.112896.

[13] Shen, S., Zhu, C., Fan, C., Wu, C., Huang, X. and Zhou, L., 2021. Research on the evolution and driving forces of the manufacturing industry during the “13th five-year plan” period in Jiangsu province of China based on natural language processing. PLoS One, 16(8), p.e0256162.

[14] Soni, M., Khan, I.R., Babu, K.S., Nasrullah, S., Madduri, A. and Rahin, S.A., 2022. Light weighted healthcare CNN model to detect prostate cancer on multiparametric MRI. Computational Intelligence and Neuroscience, 2022(1), p.5497120.

[15] More, S., Singla, J., Verma, S., Ghosh, U., Rodrigues, J.J., Hosen, A.S. and Ra, I.H., 2020. Security assured CNN-based model for reconstruction of medical images on the internet of healthcare things. IEEE Access, 8, pp.126333-126346.

[16] Anand, A., Rani, S., Anand, D., Aljahdali, H.M. and Kerr, D., 2021. An efficient CNN-based deep learning model to detect malware attacks (CNN-DMA) in 5G-IoT healthcare applications. Sensors, 21(19), p.6346.

Copyright

Copyright © 2024 Sahil Khan, Ms. Swati Jadon, Ms. Richa Tiwari. This is an open access article distributed under the Creative Commons Attribution License, which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited.

Paper Id : IJRASET63816

Publish Date : 2024-07-30

ISSN : 2321-9653

Publisher Name : IJRASET
